Ray Train

- automatically handles multi-node, multi-GPU setup, with no manual SSH setup or hostfile configuration.
- lets you define per-worker fractional resource requirements, for example, 2 CPUs and 0.5 GPU per worker.
- runs on heterogeneous machines and scales flexibly, for example, CPU for preprocessing and GPU for training.
- provides built-in fault tolerance that retries failed workers and continues from the last checkpoint.
- supports Data Parallel, Model Parallel, Parameter Server, and even custom strategies.

Ray Compiled Graphs even let you define different parallelism strategies for jointly optimizing multiple models, whereas Megatron, DeepSpeed, and similar frameworks only allow for one global setting.

You can also use Torch DDP, FSDP, DeepSpeed, etc., under the hood.

🔥 RayTurbo Train offers even more improvement to the price-performance ratio, performance monitoring and more:

- elastic training that scales to a dynamic number of workers and continues training on fewer resources, even on spot instances.

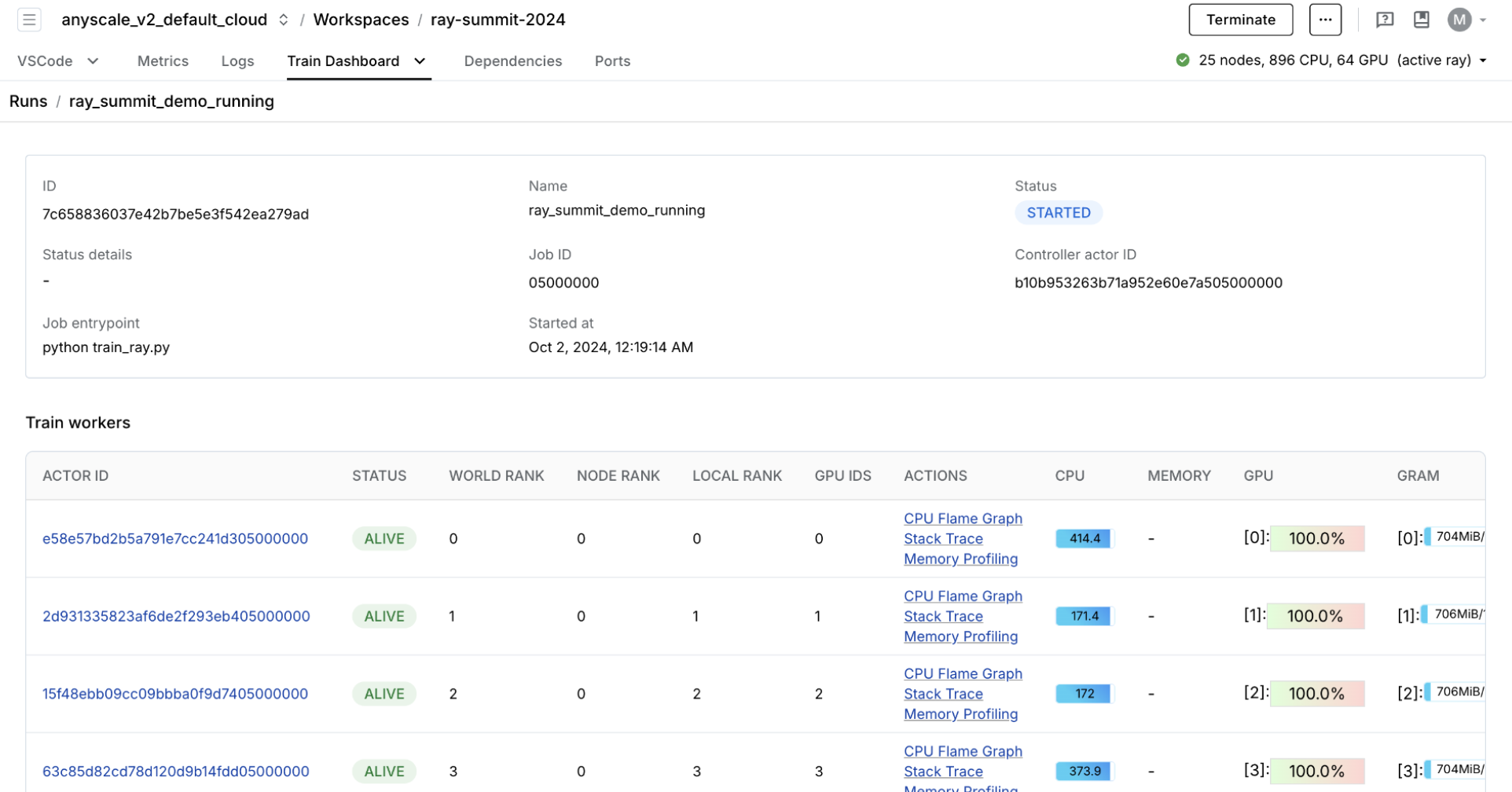

- purpose-built dashboard designed to streamline the debugging of Ray Train workloads:
  - Monitoring: View the status of training runs and train workers.
  - Metrics: See insights on training throughput and training system operation time.
  - Profiling: Investigate bottlenecks, hangs, or errors from individual training worker processes.

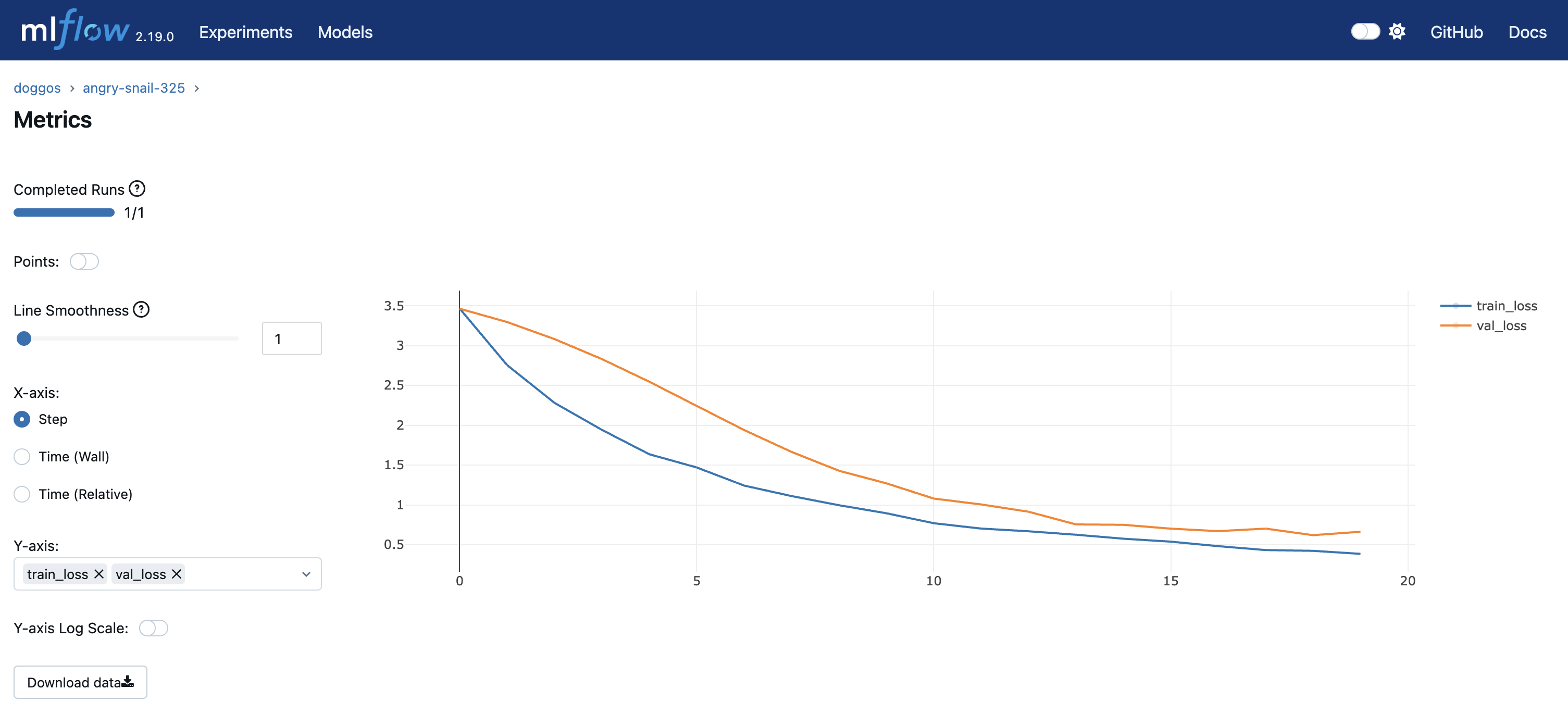

You can view experiment metrics and model artifacts in the model registry. You’re using OSS MLflow, so you can run the server by pointing it to the model registry location:

```bash
mlflow server -h 0.0.0.0 -p 8080 --backend-store-uri /mnt/cluster_storage/mlflow/doggos
```

You can view the dashboard by going to the Overview tab > Open Ports.

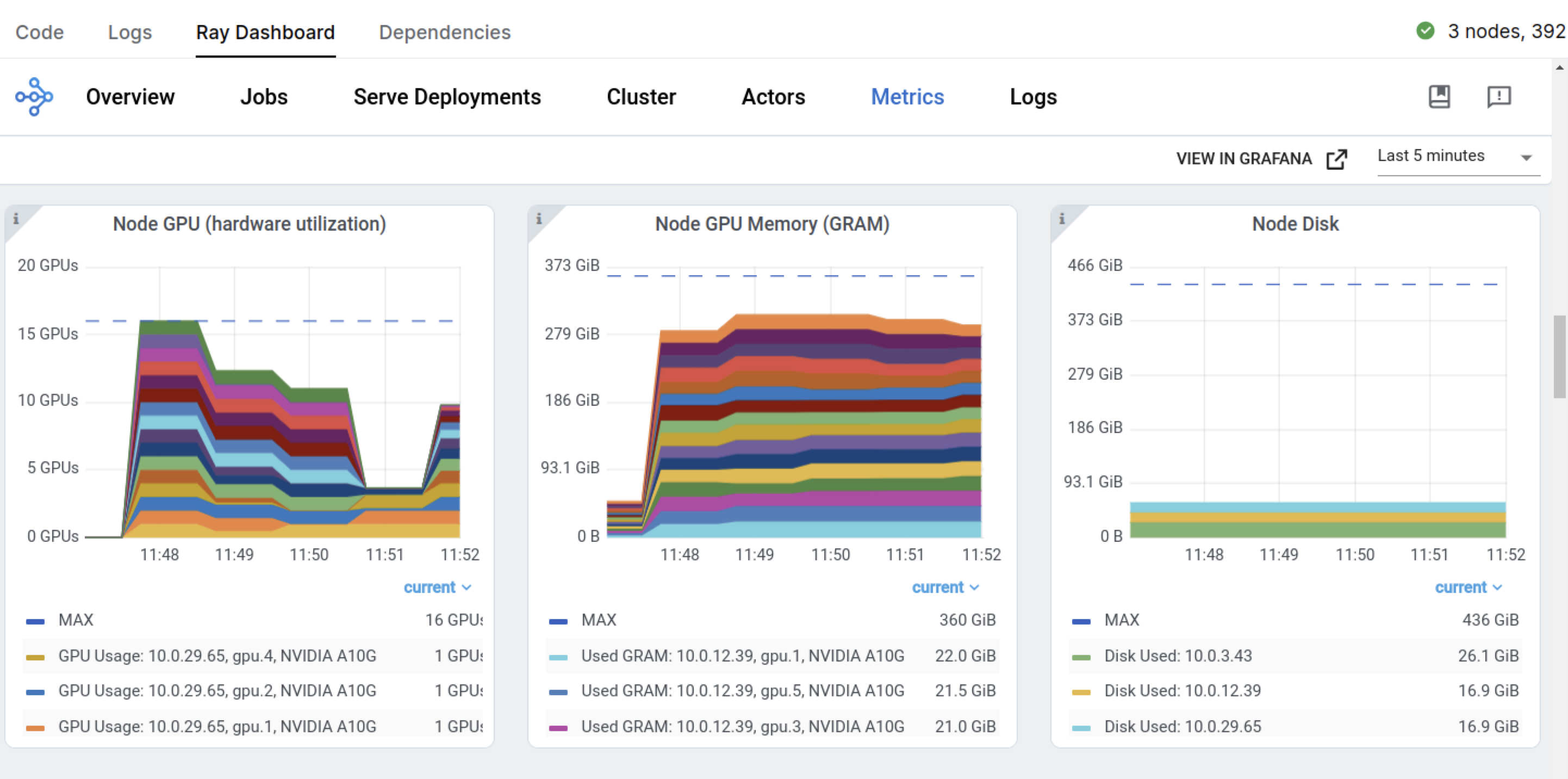

You also have the Ray Dashboard and the Train workload-specific dashboards described earlier.

```python
# Sorted runs
mlflow.set_tracking_uri(f"file:{model_registry}")
sorted_runs = mlflow.search_runs(
    experiment_names=[experiment_name], order_by=["metrics.val_loss ASC"]
)
best_run = sorted_runs.iloc[0]
best_run
```

```text
run_id                      fcb9ef8c96f844f08bcd0185601f3dbd
experiment_id               858816514880031760
status                      FINISHED
artifact_uri                file:///mnt/cluster_storage/mlflow/doggos/8588...
start_time                  2025-08-22 00:32:11.522000+00:00
end_time                    2025-08-22 00:32:32.895000+00:00
metrics.train_loss          0.35504
metrics.val_loss            0.593301
metrics.lr                  0.001
params.lr_patience          3
params.dropout_p            0.3
params.num_epochs           20
params.lr                   0.001
params.num_classes          36
params.hidden_dim           256
params.experiment_name      doggos
params.batch_size           256
params.model_registry       /mnt/cluster_storage/mlflow/doggos
params.class_to_label       {'border_collie': 0, 'pomeranian': 1, 'basset'...
params.lr_factor            0.8
params.embedding_dim        512
tags.mlflow.user            ray
tags.mlflow.source.type     LOCAL
tags.mlflow.runName         enthused-donkey-931
tags.mlflow.source.name     /home/ray/anaconda3/lib/python3.12/site-packag...
Name: 0, dtype: object
```
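The `artifact_uri` field of the best run is a `file://` URI, so a natural next step is to turn it into a local directory path before loading the saved artifacts. The helper below is a hypothetical sketch using only the standard library. The function name and the example URI are assumptions for illustration, not values from this run (the real URI is truncated in the output above).

```python
from urllib.parse import unquote, urlparse


def artifact_dir_from_uri(artifact_uri: str) -> str:
    """Convert a local MLflow artifact URI (file://...) to a filesystem path."""
    parsed = urlparse(artifact_uri)
    # Only local file URIs (or plain paths) map directly onto the filesystem.
    if parsed.scheme not in ("", "file"):
        raise ValueError(f"Not a local artifact URI: {artifact_uri}")
    # Decode any percent-encoded characters in the path component.
    return unquote(parsed.path)


# Hypothetical URI with the same shape as best_run.artifact_uri.
example_uri = "file:///tmp/mlruns/0/abc123/artifacts"
print(artifact_dir_from_uri(example_uri))
```

With the real run, the call would be `artifact_dir_from_uri(best_run.artifact_uri)`. A remote store such as `s3://` raises a `ValueError` here, because such artifacts need to be downloaded rather than read from disk.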