Deployments

First, create a deployment for the trained model that returns a probability distribution over classes for a given image URL. Use `ray_actor_options` to specify the compute each replica uses (here, one T4 GPU), and `num_replicas` to control how many replicas this deployment horizontally scales to.

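```python
import numpy as np
import torch
from PIL import Image
from ray import serve
from transformers import CLIPModel, CLIPProcessor

# TorchPredictor, collate_fn, and url_to_array are this tutorial's own helpers
# (trained-classifier predictor, batch collation, image download) defined earlier.


@serve.deployment(
    num_replicas=1,  # number of replicas (horizontal scaling); must be an int, not "1"
    ray_actor_options={
        "num_gpus": 1,  # each replica reserves one GPU...
        "accelerator_type": "T4",  # ...of type NVIDIA T4
    },
)
class ClassPredictor:
    def __init__(self, model_id, artifacts_dir, device="cuda"):
        """Initialize the model."""
        # Embedding model
        self.processor = CLIPProcessor.from_pretrained(model_id)
        self.model = CLIPModel.from_pretrained(model_id)
        self.model.to(device=device)
        self.device = device

        # Trained classifier
        self.predictor = TorchPredictor.from_artifacts_dir(artifacts_dir=artifacts_dir)
        self.preprocessor = self.predictor.preprocessor

    def get_probabilities(self, url):
        # Download the image and convert it to an RGB PIL image
        image = Image.fromarray(np.uint8(url_to_array(url=url))).convert("RGB")
        inputs = self.processor(images=[image], return_tensors="pt", padding=True).to(self.device)

        # Embed the image with CLIP without tracking gradients
        with torch.inference_mode():
            embedding = self.model.get_image_features(**inputs).cpu().numpy()

        # Score the embedding with the trained classifier
        outputs = self.predictor.predict_probabilities(
            collate_fn({"embedding": embedding})
        )
        return {"probabilities": outputs["probabilities"][0]}
```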

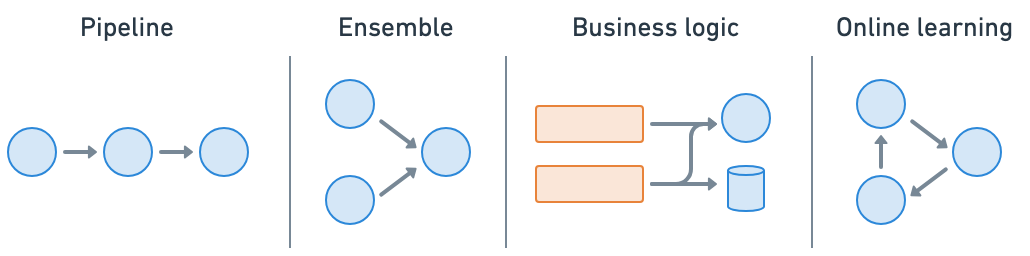

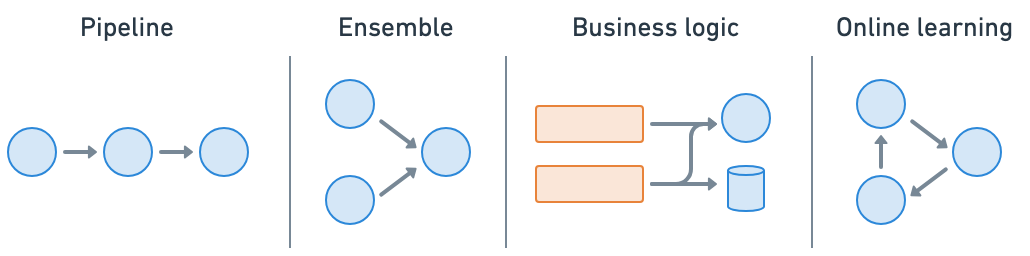

🧱 Model composition

Ray Serve makes model composition straightforward: you can compose multiple deployments, each containing ML models or business logic, into a single application. You can scale and configure each deployment independently, including with fractional resources.